CodeGuardian AI

Team: NextGen Coders

Hackathon: Techvoot AI Hackathon 2026

Category: AI for Software Development | Automated Code Review

CodeGuardian AI is an automated code review assistant that analyses source code for quality, security, and standards compliance, delivering clear, actionable feedback before human review begins.


What CodeGuardian AI Really Is


CodeGuardian AI is an automated code review assistant that analyses source code before it reaches production. It reviews codebases against defined quality, security, and structure checks and returns a clear, organised report that developers can use immediately.

You submit code. The system reviews it systematically. Developers receive feedback before human review even begins. It fits into existing workflows rather than asking teams to change how they already work.

Why the Team Chose This Problem


As projects grow, manual reviews start to show strain.

- Review depth varies depending on time and reviewer availability.

- Repetitive issues slip through because they’re easy to miss.

- Security checks are often uneven across files.

- Senior developers spend time flagging the same patterns repeatedly.

Over time, this creates technical debt that teams notice only when it slows them down.

CodeGuardian AI was built to introduce consistency at the earliest review stage, without adding overhead to developers already under delivery pressure.

How the Code Review Flow Works

The system is designed as a straightforward review pipeline, not a black box.

Code Submission

Developers upload their project files or code packages for review.

The platform supports structured project layouts and allows teams to focus analysis on relevant source files.

This keeps reviews targeted instead of noisy.
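A minimal sketch of how a focused review scope could be expressed, assuming include and exclude glob patterns. The patterns, the `focus_files` name and the Laravel-style paths are illustrative assumptions, not CodeGuardian AI's documented configuration.

```python
from fnmatch import fnmatchcase

# Hypothetical focus settings for a Laravel-style project layout.
INCLUDE = ["app/*.php", "routes/*.php", "resources/js/*.js"]
EXCLUDE = ["*/tests/*", "*.min.js"]


def focus_files(paths, include=INCLUDE, exclude=EXCLUDE):
    """Keep paths that match at least one include pattern and no exclude pattern."""
    return [
        p for p in paths
        if any(fnmatchcase(p, pat) for pat in include)
        and not any(fnmatchcase(p, pat) for pat in exclude)
    ]
```

In `fnmatchcase`, `*` also matches `/`, so `app/*.php` covers nested folders such as `app/Http/Controllers`, and matching is case-sensitive on every platform.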

Review Rules

Teams can define or apply review rules based on their development standards.

This ensures:

- Reviews remain consistent across contributors.

- Standards are enforced evenly, regardless of who wrote the code.

- Issues are flagged early instead of during final review stages.
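One way to keep rules consistent across contributors is to resolve a single effective rule set per project: built-in defaults plus team overrides, applied the same way to every file. The rule IDs, categories and severity levels below are hypothetical examples, not the system's actual predefined rules.

```python
from dataclasses import dataclass, replace

SEVERITIES = ("low", "medium", "high", "critical")


@dataclass(frozen=True)
class Rule:
    rule_id: str
    category: str  # e.g. "quality", "security", "standards"
    severity: str  # one of SEVERITIES
    description: str
    enabled: bool = True


# Hypothetical built-in rules, for illustration only.
DEFAULT_RULES = {
    "no-debug-output": Rule("no-debug-output", "quality", "low",
                            "Remove dd()/var_dump() calls before merging"),
    "no-raw-sql": Rule("no-raw-sql", "security", "high",
                       "Avoid building SQL from unescaped request input"),
    "psr1-class-names": Rule("psr1-class-names", "standards", "medium",
                             "Class names should use StudlyCaps (PSR-1)"),
}


def effective_rules(overrides):
    """Merge team overrides onto the defaults; one result serves every contributor."""
    rules = dict(DEFAULT_RULES)
    for rule_id, changes in overrides.items():
        if rule_id not in rules:
            raise KeyError(f"unknown rule: {rule_id}")
        if "severity" in changes and changes["severity"] not in SEVERITIES:
            raise ValueError(f"invalid severity for {rule_id}: {changes['severity']}")
        # Frozen dataclasses are copied, so the defaults are never mutated.
        rules[rule_id] = replace(rules[rule_id], **changes)
    # Disabled rules are dropped so they never produce findings.
    return {rid: rule for rid, rule in rules.items() if rule.enabled}
```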

Automated Code Analysis

Each file is analysed individually rather than as a bulk scan.

The system checks for:

- Code quality issues.

- Potential security concerns.

- Violations of defined coding standards.

This file-level approach improves accuracy and makes feedback easier to trace back to specific areas of the codebase.
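The sketch below illustrates file-level analysis: each file is scanned on its own and every finding carries its file path and line number, which is what makes feedback traceable. The page states that the system integrates OpenAI; here two simple regular-expression checks stand in for that analysis, and both rules are hypothetical.

```python
import re
from dataclasses import dataclass


@dataclass(frozen=True)
class Finding:
    file: str
    line: int
    rule_id: str
    category: str
    severity: str


# Hypothetical pattern checks standing in for the real (model-based) analysis.
CHECKS = [
    ("no-debug-output", "quality", "low", re.compile(r"\b(dd|var_dump)\s*\(")),
    ("no-raw-sql", "security", "high", re.compile(r"DB::raw\s*\(.*\$request")),
]


def analyse_file(path, source):
    """Analyse one file in isolation and tag each finding with its file and line."""
    findings = []
    for lineno, text in enumerate(source.splitlines(), start=1):
        for rule_id, category, severity, pattern in CHECKS:
            if pattern.search(text):
                findings.append(Finding(path, lineno, rule_id, category, severity))
    return findings


def analyse_project(files):
    """files maps path -> source text; each file is analysed independently."""
    return {path: analyse_file(path, files[path]) for path in sorted(files)}
```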

Review Reports & Scoring

After analysis, CodeGuardian AI generates a structured review report.

The report includes:

- Categorised issues.

- Severity indicators.

- File-level references.

- A consolidated quality score for the project.

Reports can be exported and shared, making them useful for audits, internal reviews, or quality tracking over time.
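A possible shape for the report and its consolidated score. The per-severity penalty weights are assumptions made for illustration; the project's actual scoring formula is not described. JSON is used as one simple export format.

```python
import json
from collections import Counter

# Assumed penalty per finding; the real scoring formula is not published.
SEVERITY_PENALTY = {"low": 1, "medium": 3, "high": 7, "critical": 15}


def build_report(findings):
    """findings: iterable of (file, line, category, severity) tuples."""
    findings = sorted(findings)  # stable order: by file, then line
    by_category = Counter(cat for _, _, cat, _ in findings)
    by_severity = Counter(sev for _, _, _, sev in findings)
    penalty = sum(SEVERITY_PENALTY[sev] * n for sev, n in by_severity.items())
    return {
        "issues": [
            {"file": f, "line": ln, "category": cat, "severity": sev}
            for f, ln, cat, sev in findings
        ],
        "by_category": dict(by_category),
        "by_severity": dict(by_severity),
        # Start from 100 and clamp at 0 so the score stays within 0-100.
        "quality_score": max(0, 100 - penalty),
    }


def export_report(report):
    """Serialise the report to JSON so it can be shared or archived."""
    return json.dumps(report, indent=2, sort_keys=True)
```

With one medium, one high and one low finding, the penalty is 3 + 7 + 1 = 11, giving a score of 89.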

Built in 24 hours. Proven under pressure.


Why Development Teams Would Use This

CodeGuardian AI is not designed to replace peer review. It exists to support it.

Teams benefit from:

- More consistent review standards.

- Reduced manual review load.

- Faster feedback during development cycles.

Human reviewers spend less time catching basics and more time making meaningful architectural decisions.

The Technology Behind the Scenes

- Backend: Laravel

- Database: MySQL

- Frontend: HTML5, CSS3, Bootstrap

- Integrations: OpenAI
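Since each file is analysed individually, an OpenAI-backed review might send one request per file. The sketch below only builds a Chat Completions-style request body (a `model` plus a list of role/content `messages`); the prompt wording, the `build_review_request` helper and the expected JSON reply format are assumptions, and the model name is left to the caller.

```python
import json

# Hypothetical system prompt; the wording CodeGuardian AI actually uses is not published.
SYSTEM_PROMPT = (
    "You are a code reviewer. Report quality, security and coding-standard "
    "issues as a JSON object with an 'issues' list. Each issue needs "
    "'line', 'category', 'severity' and 'suggestion'."
)


def build_review_request(model, path, language, source, rule_ids):
    """Build a Chat Completions-style request body for reviewing a single file."""
    user_message = (
        f"File: {path}\nLanguage: {language}\n"
        f"Rules: {json.dumps(rule_ids)}\n\n{source}"
    )
    return {
        "model": model,
        "messages": [
            {"role": "system", "content": SYSTEM_PROMPT},
            {"role": "user", "content": user_message},
        ],
    }
```

With the official `openai` Python package, such a body could be sent as `client.chat.completions.create(**body)`; that call is left out here so the sketch runs offline.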

Solution Walkthrough

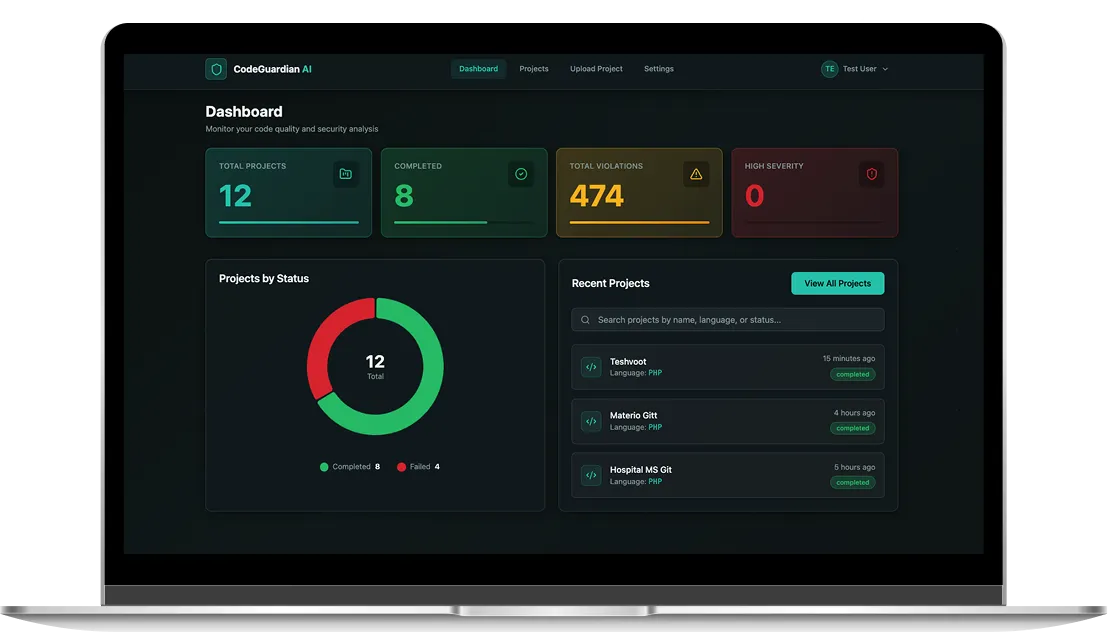

Dashboard:

Gives a snapshot of overall review activity, including total projects analysed, completed scans, violation counts by severity, and recently reviewed projects.
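The dashboard figures are simple aggregations over stored scans. This sketch assumes a hypothetical `ProjectScan` record with a status, timestamp and per-severity violation counts; the real MySQL schema is not documented.

```python
from collections import Counter
from dataclasses import dataclass, field
from datetime import datetime


@dataclass
class ProjectScan:
    name: str
    status: str  # assumed values such as "completed", "running", "failed"
    analysed_at: datetime
    violations: dict = field(default_factory=dict)  # severity -> count


def dashboard_summary(scans, recent=5):
    """Aggregate the headline figures a review dashboard might show."""
    totals = Counter()
    for scan in scans:
        totals.update(scan.violations)  # adds counts per severity
    recent_names = []
    for scan in sorted(scans, key=lambda s: s.analysed_at, reverse=True):
        if scan.name not in recent_names:  # list each project once
            recent_names.append(scan.name)
    return {
        "total_projects": len({s.name for s in scans}),
        "completed_scans": sum(1 for s in scans if s.status == "completed"),
        "violations_by_severity": dict(totals),
        "recent_projects": recent_names[:recent],
    }
```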

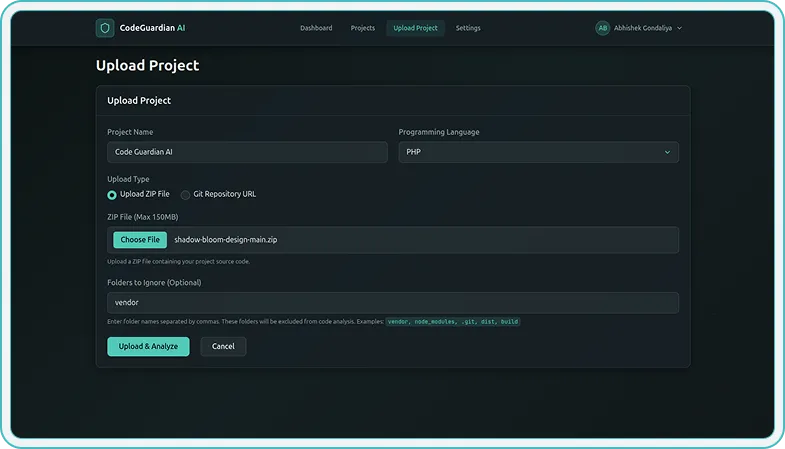

Upload Projects

Allows developers to upload code via ZIP file or Git repository URL, select the programming language, and exclude folders that are not relevant to the review.
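For ZIP uploads, one approach is to read the archive in memory and keep only files for the selected language that sit outside excluded folders. The language-to-extension map and the `load_zip_sources` name are hypothetical, and Git repository URLs are not covered by this sketch.

```python
import io
import zipfile
from pathlib import PurePosixPath

# Hypothetical mapping from the language chosen at upload to file extensions.
LANGUAGE_EXTENSIONS = {
    "php": (".php",),
    "javascript": (".js", ".mjs"),
    "python": (".py",),
}


def load_zip_sources(zip_bytes, language, excluded_dirs=()):
    """Return {path: text} for reviewable files in an uploaded ZIP archive."""
    extensions = LANGUAGE_EXTENSIONS[language]  # KeyError for unsupported languages
    excluded = set(excluded_dirs)
    sources = {}
    with zipfile.ZipFile(io.BytesIO(zip_bytes)) as archive:
        for info in archive.infolist():
            if info.is_dir():
                continue
            path = PurePosixPath(info.filename)
            # Ignore absolute paths and ".." segments rather than trusting archive names.
            if path.is_absolute() or ".." in path.parts:
                continue
            # Skip files inside any excluded folder, at any depth.
            if excluded.intersection(path.parts[:-1]):
                continue
            if path.suffix.lower() not in extensions:
                continue
            sources[str(path)] = archive.read(info).decode("utf-8", errors="replace")
    return sources
```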

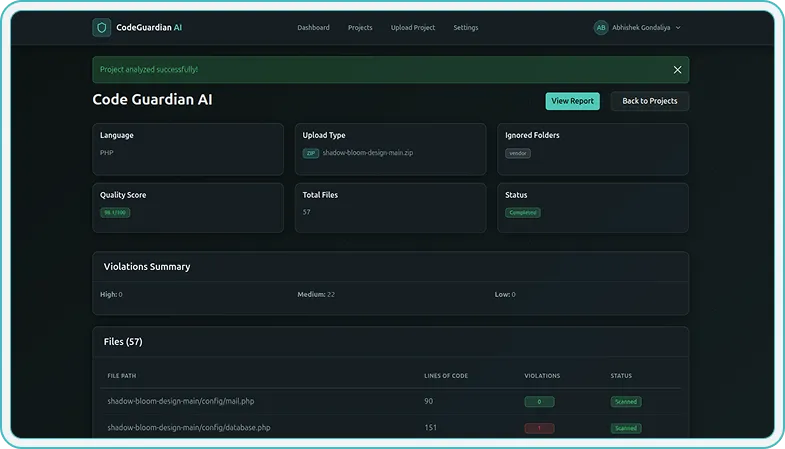

Analytics

Breaks down violations by severity level and file, showing file names, issue counts, status, and lines of code to help teams pinpoint problem areas quickly.
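A sketch of the per-file breakdown, assuming findings arrive as (path, severity) pairs. The pass/fail status rule and counting non-blank lines as lines of code are assumptions made for illustration.

```python
from collections import Counter


def file_breakdown(sources, findings):
    """sources: path -> text; findings: list of (path, severity) pairs."""
    per_file = {path: Counter() for path in sources}
    for path, severity in findings:
        per_file.setdefault(path, Counter())[severity] += 1
    rows = []
    for path, counts in per_file.items():
        text = sources.get(path, "")
        rows.append({
            "file": path,
            "issues": sum(counts.values()),
            # Assumed status rule: any high or critical finding fails the file.
            "status": "fail" if counts["high"] or counts["critical"] else "pass",
            # Count non-blank lines as lines of code.
            "loc": sum(1 for line in text.splitlines() if line.strip()),
            "by_severity": dict(counts),
        })
    # Most problematic files first; ties broken by path for stable output.
    rows.sort(key=lambda r: (-r["issues"], r["file"]))
    return rows
```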

Report & Quality Score

Presents visual summaries of violations by category and severity, assigns a quality score out of 100, and lists actionable suggestions for fixing each issue.

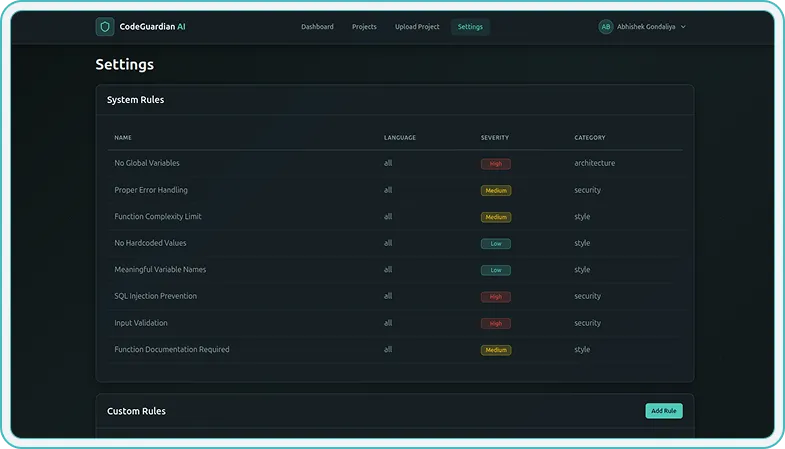

Settings

Displays the predefined system rules used during analysis, allowing teams to review the standards applied during code evaluation.
